Greetings, and welcome to another Dojo. Ever wonder what goes into modeling amps and pedals? Typically, when guitarists talk about whether an amp model “sounds real,” we describe it in tonal terms: gain, EQ, distortion character. But great tone is the successful solution to a complex, essentially time-based problem.

Without getting needlessly technical, an amplifier is not a static filter that reshapes frequency content and stops there. It is a dynamic system that responds differently depending on what just happened, what is happening, and how much energy (a.k.a. voltage) is being pushed through it. For modeling technology to work convincingly, it has to track complex changes over time, not just measure a snapshot of a moment of “tone.”
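
To make the static-versus-dynamic distinction concrete, here is a minimal Python sketch. It is a toy under stated assumptions, not how any commercial modeler works: `static_shaper`, `DynamicStage`, and every parameter value are hypothetical names and numbers chosen for illustration. The static version always returns the same output for the same input sample. The dynamic version keeps a running memory of recent level, so the same note lands differently depending on what was played just before it.

```python
import math


def static_shaper(x, drive=4.0):
    """Memoryless soft clipper: output depends only on the current sample."""
    return math.tanh(drive * x)


class DynamicStage:
    """Toy gain stage whose drive depends on recent input level (hypothetical)."""

    def __init__(self, drive=4.0, memory=0.999, depth=0.5):
        self.drive = drive
        self.memory = memory  # closer to 1.0 means a longer memory
        self.depth = depth    # how strongly past level reduces the drive
        self.energy = 0.0     # smoothed record of recent input level

    def process(self, x):
        # One-pole smoother: blend the new level into the running estimate.
        self.energy = self.memory * self.energy + (1.0 - self.memory) * abs(x)
        # Recent loud playing lowers the effective drive.
        effective_drive = self.drive / (1.0 + self.depth * self.energy * 10.0)
        return math.tanh(effective_drive * x)


def run_probe(stage, history_level, n_history=5000, probe=0.2):
    """Feed a steady history, then return the response to one probe sample."""
    for _ in range(n_history):
        stage.process(history_level)
    return stage.process(probe)


after_quiet = run_probe(DynamicStage(), 0.0)  # probe follows silence
after_loud = run_probe(DynamicStage(), 0.9)   # probe follows loud playing
print(static_shaper(0.2), after_quiet, after_loud)
```

The identical probe sample comes out noticeably smaller after the loud passage than after silence, while the static shaper cannot tell the two situations apart.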

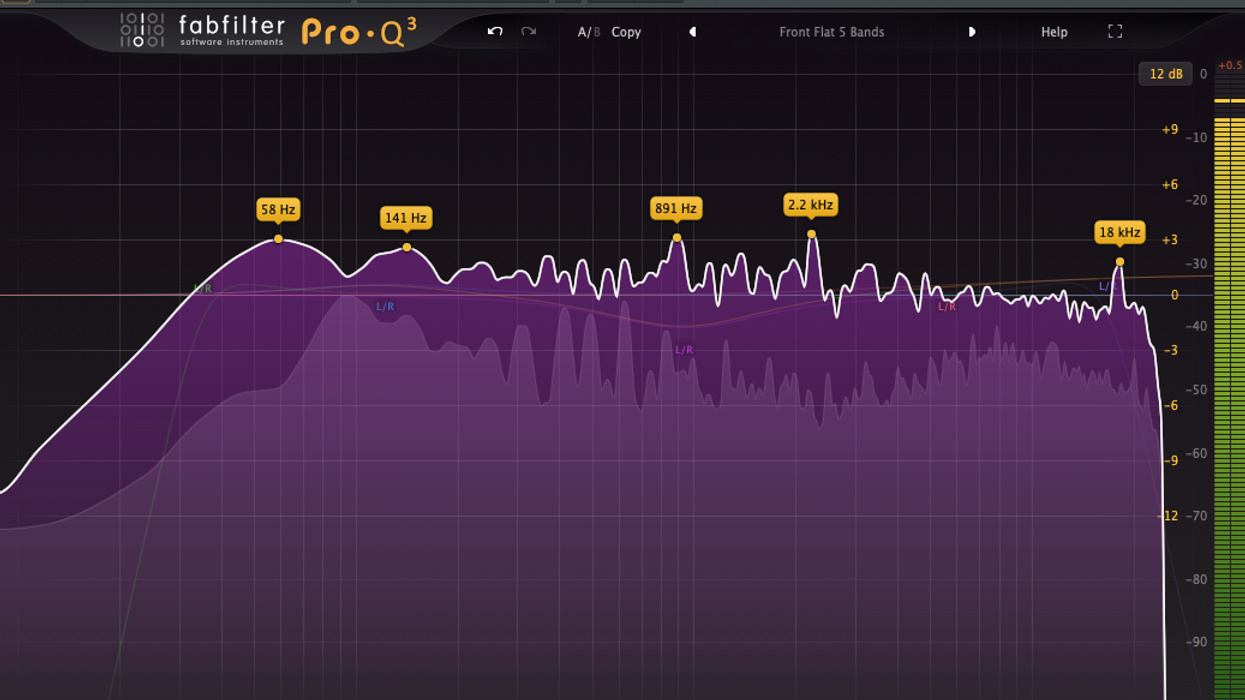

Early amp-modeling systems struggled because they focused primarily on spectral snapshots: what an amp sounds like at a given setting over a narrow range of input levels. Those snapshots, while accurate, were remarkably limited. Real amplifiers don’t remain in one state long enough for a snapshot to matter. They are constantly transitioning between states.

Put your brain in slow motion for a moment. When a string is struck, the initial transient carries enormous energy. Harmonics bloom unevenly as the fundamental note(s) emerge. Pick material and attack angle alter transient shape before the signal ever leaves the guitar (think fingerstyle versus pick). String gauge and tension alter harmonic emphasis and decay. Pickups engage with the string differently depending on design and height. Guitar wiring and controls shape impedance and bandwidth. Cable capacitance subtly alters high-frequency behavior long before any gain stage is involved. By the time the signal hits the amp, it already contains a history.

What then reaches the amp input is already the result of a complex upstream system, and inside the amplifier, that history continues to develop. Preamp stages interact with tone stacks in non-linear ways. Gain staging determines not only how much distortion occurs, but where it occurs. Effects loops interrupt the signal path at predetermined stages, altering how time-based effects are compressed and re-energized. As gain stages are pushed, harmonic content doesn’t simply increase—it redistributes.

Then comes the power amp—arguably the most misunderstood contributor to feel. Power amps do not simply make things louder. They can also compress under load, recover, and compress again. They respond differently depending on frequency content and sustained energy.
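
The compress, recover, compress-again behavior can be sketched in a few lines of Python. This is a deliberately crude illustration, assuming that "sag" can be approximated as a gain that droops as a smoothed load estimate rises. The class name `SaggingPowerAmp` and its `sag`, `attack`, and `release` values are hypothetical and not taken from any real amp or product.

```python
class SaggingPowerAmp:
    """Toy power stage: gain droops under sustained load, then recovers."""

    def __init__(self, sag=0.4, attack=0.01, release=0.001):
        self.sag = sag          # maximum fraction of gain lost under full load
        self.attack = attack    # how quickly the load estimate rises
        self.release = release  # how slowly it falls back when playing stops
        self.load = 0.0         # smoothed estimate of recent demand

    def process(self, x):
        level = abs(x)
        # Rise quickly when the signal gets louder, fall slowly when it drops.
        coeff = self.attack if level > self.load else self.release
        self.load += coeff * (level - self.load)
        gain = 1.0 - self.sag * self.load
        return x * gain


amp = SaggingPowerAmp()
first_hit = amp.process(1.0)      # fresh amp, almost full gain

for _ in range(2000):             # keep hammering: gain sags
    sustained = amp.process(1.0)

for _ in range(5000):             # rest: the load estimate decays
    amp.process(0.0)

recovered = amp.process(1.0)      # gain has largely come back
print(first_hit, sustained, recovered)
```

The first hit and the post-rest hit both pass at nearly full level, while the sustained chord is held well below them. A real power section also reacts to frequency content, which this sketch ignores.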

Finally, the speaker and cabinet take over, and physics takes the wheel. Speakers have inherent inertia and wildly different efficiency curves depending on materials and volume. Cabinets add another layer, with resonant frequencies shaped by their dimensions, storing and releasing energy at varying rates.
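
One way to picture "storing and releasing energy" is a single resonance that keeps ringing after the input has stopped. The Python sketch below assumes a cabinet mode can be approximated by a basic two-pole resonator. The function name `resonator_impulse_response`, the 110 Hz frequency, and both decay times are illustrative assumptions, not measurements of any real cabinet.

```python
import math


def resonator_impulse_response(freq_hz, decay_s, sample_rate=48000, n=4800):
    """Ring-down of a two-pole resonator hit by a single unit impulse."""
    r = math.exp(-1.0 / (decay_s * sample_rate))      # pole radius sets decay
    theta = 2.0 * math.pi * freq_hz / sample_rate     # pole angle sets pitch
    a1 = 2.0 * r * math.cos(theta)
    a2 = -r * r
    y1 = y2 = 0.0
    out = []
    for i in range(n):
        x = 1.0 if i == 0 else 0.0  # energy goes in on the first sample only
        y = x + a1 * y1 + a2 * y2   # later output is stored energy coming back
        out.append(y)
        y2, y1 = y1, y
    return out


def tail_energy(samples, start=2400):
    """Energy still being released after the first 50 ms (at 48 kHz)."""
    return sum(s * s for s in samples[start:])


tight = resonator_impulse_response(110.0, decay_s=0.005)
boomy = resonator_impulse_response(110.0, decay_s=0.05)
print(tail_energy(tight), tail_energy(boomy))
```

Both resonators receive exactly the same impulse, yet the slower-decaying one is still releasing far more energy 50 ms later. A real cabinet has many such modes overlapping at different rates.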

Now put your brain back into normal speed. Our ear perceives this entire chain as a single event!

To build a convincing “model,” developers must pass a variety of static and dynamic signals through the real device, measure its input-output behavior, and then build DSP and machine-learning models that match those behaviors, accounting for how dynamic range, harmonic density, compression, and spectral contour shift together over time.

Our ear is exquisitely sensitive to these changes, particularly to the rate at which they occur. Too fast, and the modeled sound comes off stiff. Too slow, and it feels detached.

This is why convincing modeling can’t focus solely on recreating individual elements in isolation. What matters is gestalt behavior: how the whole system responds as energy flows through it, moment to moment.

How do modelers do this? Most developers—Neural DSP, Universal Audio, Kemper, Fractal, Line 6, and others—blend multiple approaches. These typically include running a range of static and dynamic signals through the real device to measure input–output behavior, isolate artifacts, and quantify nonlinear characteristics; circuit analysis (where designers model each component’s behavior mathematically and derive a transfer function for DSP implementation); and extensive listening tests. Increasingly, machine-learning models are trained and iterated to capture the unit’s behavior across multiple control settings, with each pass refining the results.
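
The measure-then-match loop can be shown in miniature. The Python sketch below is not any vendor's method: `mystery_device` is a hypothetical stand-in for real hardware, the model is a single `tanh` curve with one unknown `drive` parameter, and a plain grid search stands in for the far more capable fitting and machine-learning tools developers actually use. It also covers only static behavior, whereas real workflows probe dynamic behavior too.

```python
import math


def mystery_device(x):
    """Stand-in for the real unit (hypothetical): pretend its drive is unknown."""
    return math.tanh(3.2 * x)


def measure(device, levels):
    """Send test levels through the device and record (input, output) pairs."""
    return [(x, device(x)) for x in levels]


def model(x, drive):
    """Candidate model with one adjustable parameter."""
    return math.tanh(drive * x)


def fit_drive(measurements, candidates):
    """Pick the candidate drive whose output best matches the measurements."""
    def error(drive):
        return sum((model(x, drive) - y) ** 2 for x, y in measurements)
    return min(candidates, key=error)


levels = [i / 20.0 for i in range(-20, 21)]     # sweep from -1.0 to 1.0
data = measure(mystery_device, levels)
candidates = [i / 10.0 for i in range(1, 101)]  # try drives 0.1 through 10.0
best_drive = fit_drive(data, candidates)
print(best_drive)
```

Swapping the grid search for gradient-based training and the one-parameter curve for a stateful network is, in spirit, the step from this sketch toward the machine-learning approaches described above.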

For players, this means that now more than ever we can enjoy legendary amp and pedal tones in powerful, highly portable hardware or in software form—along with tremendous flexibility when recording, consistent “perfect tone” performance (no tubes or speakers to fail), and the ability to be deeply creative with our tonal palette by mixing amps, speaker cabinets, microphones, and even modeled room environments.

Am I giving up my real amps? Never. But I’m completely comfortable using either technology as I see fit—and enjoying what each brings to the process. Until next time, namaste.